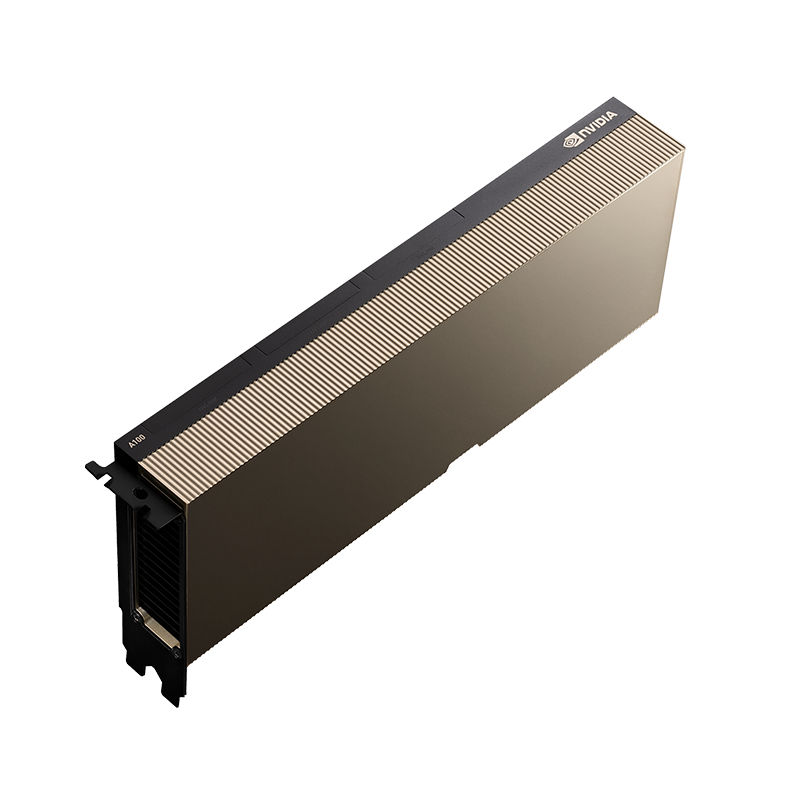

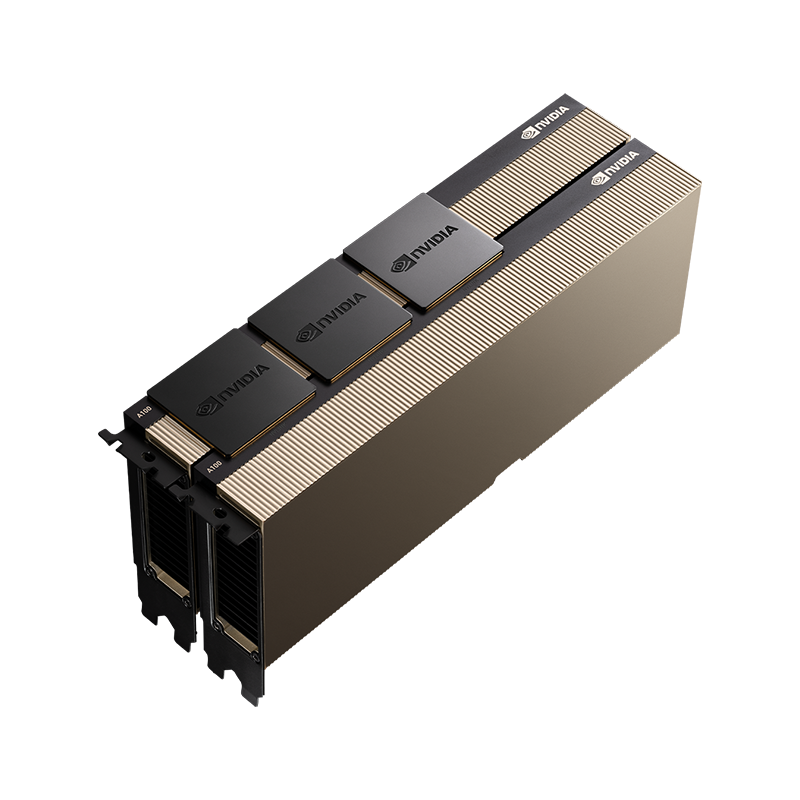

NVIDIA A100 80GB

Unprecedented Acceleration for World’s Highest-Performing Elastic Data Centers

The NVIDIA A100 80GB Tensor Core GPU delivers unprecedented acceleration—at every scale—to power the world’s highest performing elastic data centers for AI, data analytics, and high-performance computing (HPC) applications. As the engine of the NVIDIA data center platform, A100 provides up to 20x higher performance over the prior NVIDIA Volta generation. A100 can efficiently scale up or be partitioned into seven isolated GPU instances, with Multi-Instance GPU (MIG) providing a unified platform that enables elastic data centers to dynamically adjust to shifting workload demands.

A100 is part of the complete NVIDIA data center solution that incorporates building blocks across hardware, networking, software, libraries, and optimized AI models and applications from NGC. Representing the most powerful end-to-end AI and HPC platform for data centers, it allows researchers to deliver real-world results and deploy solutions into production at scale, while allowing IT to optimize the utilization of every available A100 GPU.

Data Center Class Reliability

Designed for 24 x 7 data center operations and driven by power-efficient hardware and components selected for optimum performance, durability, and longevity. Every NVIDIA A100 board is designed, built and tested by NVIDIA to the most rigorous quality and performance standards, ensuring that leading OEMs and systems integrators can meet or exceed the most demanding real-world conditions.

Secure and measured boot with hardware root of trust technology within the GPU provides an additional layer of security for data centers. A100 meets the latest data center standards and is NEBS Level 3 compliant. The NVIDIA A100 includes a CEC 1712 security chip that enables secure and measured boot with hardware root of trust, ensuring that firmware has not been tampered with or corrupted.

NVIDIA Ampere Architecture

NVIDIA A100 is the world’s most powerful data center GPU for AI, data analytics, and high-performance computing (HPC) applications. Building upon the major SM enhancements from the Turing GPU, the NVIDIA Ampere architecture enhances tensor matrix operations and concurrent executions of FP32 and INT32 operations.

More Efficient CUDA Cores

The NVIDIA Ampere architecture’s CUDA cores bring up to 2.5x the single-precision floating point (FP32) throughput compared to the previous generation, providing significant performance improvements for any class or algorithm or application that can benefit from embarrassingly parallel acceleration techniques.

Third Generation Tensor Cores

Purpose-built for deep learning matrix arithmetic at the heart of neural network training and inferencing functions, the NVIDIA A100 includes enhanced Tensor Cores that accelerate more datatypes (TF32 and BF16) and includes a new Fine-Grained Structured Sparsity feature that delivers up to 2x throughput for tensor matrix operations compared to the previous generation.

PCIe Gen 4

The NVIDIA A100 supports PCI Express Gen 4, which provides double the bandwidth of PCIe Gen 3, improving data-transfer speeds from CPU memory for data-intensive tasks like AI and data science.

High Speed HBM2e Memory

With 80 gigabytes (GB) of high-bandwidth memory (HBM2e), the NVIDIA A100 PCIe delivers improved raw bandwidth of 1.55TB/sec, as well as higher dynamic random access memory (DRAM) utilization efficiency at 95 percent. A100 PCIe delivers 1.7x higher memory bandwidth over the previous generation.

Error Correction Without a Performance or Capacity Hit

HBM2e memory implements error correction without any performance (bandwidth) or capacity hit, unlike competing technologies like GDDR6 or GDDR6X.

Compute Preemption

Preemption at the instruction-level provides finer grain control over compute and tasks to prevent longer-running applications from either monopolizing system resources or timing out.

![A100PCIe_fr[1]](https://i0.wp.com/www.easyshoppi.com/wp-content/uploads/2023/08/A100PCIe_fr1.png?fit=800%2C800&ssl=1)

![a100-pcie-3QTR[1]](https://i0.wp.com/www.easyshoppi.com/wp-content/uploads/2023/08/a100-pcie-3QTR1.png?fit=800%2C800&ssl=1)

![A100PCIe_3QTR-TopLeft-Dual_NVLinks_Black_Alt[1]](https://i0.wp.com/www.easyshoppi.com/wp-content/uploads/2023/08/A100PCIe_3QTR-TopLeft-Dual_NVLinks_Black_Alt1.png?fit=800%2C800&ssl=1)

![A100PCIe_top-2[1]](https://i0.wp.com/www.easyshoppi.com/wp-content/uploads/2023/08/A100PCIe_top-21.png?fit=800%2C800&ssl=1)

![A100PCIe_top[1]](https://i0.wp.com/www.easyshoppi.com/wp-content/uploads/2023/08/A100PCIe_top1.png?fit=800%2C800&ssl=1)

There are no reviews yet.